Your Company Is Going Through AI Puberty (And It Shows)

Why the chaos you're feeling isn't a training gap or a strategy problem. It's an organizational development challenge. Plus: A maturity framework that actually explains what's happening.

If you’re still asking “how do we get our team to use AI more,” you’re solving the wrong problem.

That’s like asking how to get a teenager to be taller. The height is a symptom. What you’re actually dealing with is a developmental stage. One that’s awkward, volatile, and (the uncomfortable part) can’t be skipped.

You’re not implementing technology. You’re going through a rite of passage that will determine whether your organization matures or self-destructs.

Let me explain.

The Framing That Changed How I See This

Dario Amodei, CEO of Anthropic, recently published an essay arguing that humanity is entering a “technological adolescence.” His point: we’re about to be handed almost unimaginable power, and it’s deeply unclear whether our institutions are mature enough to wield it.

I’ve been sitting with that framing for the last few days. Not because I’m worried about civilization (I mean, I am, but that’s not actionable for most of us). I keep coming back to it because I see the same pattern at organizational scale.

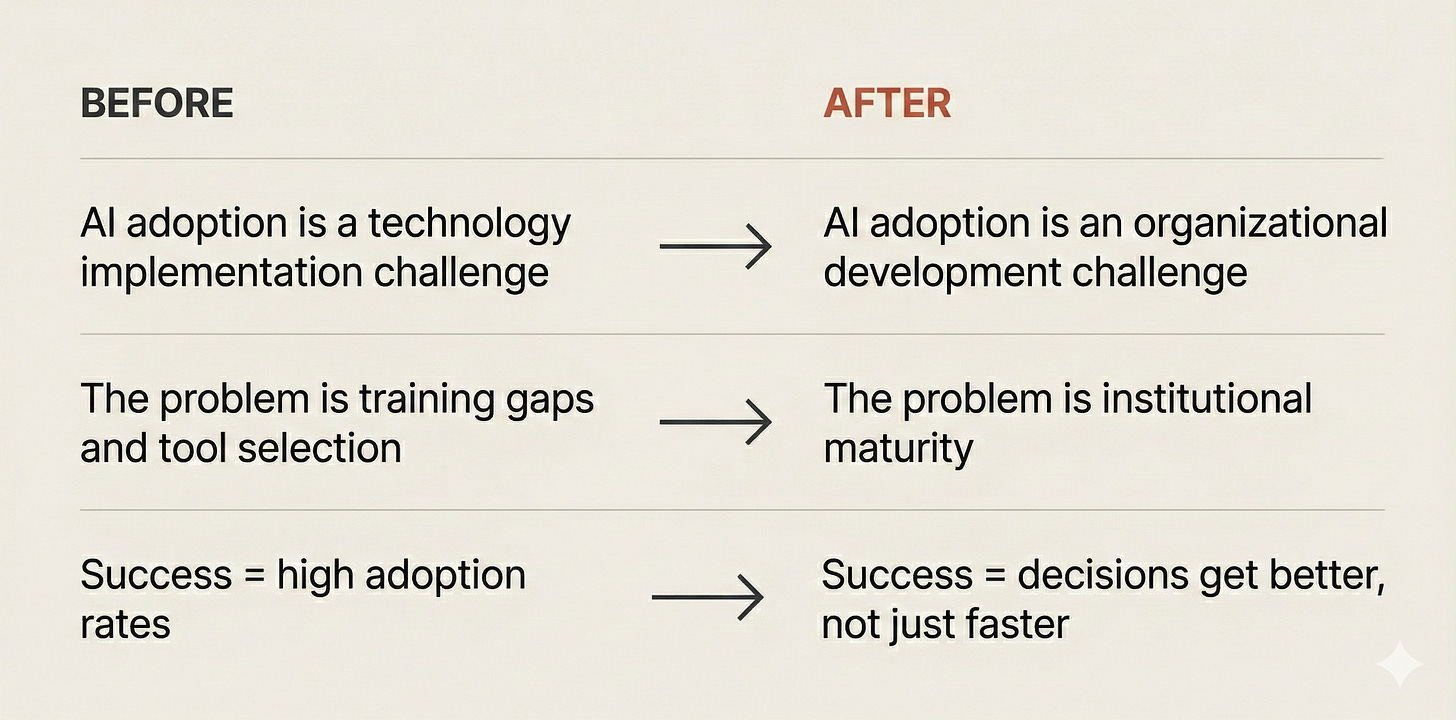

The risks Amodei describes for civilization? They show up in miniature inside companies.

Every organization experimenting with AI right now is going through its own adolescence. Gaining capabilities faster than it’s developing judgment. Experiencing volatility it doesn’t understand. Making decisions without the institutional maturity to handle the consequences.

And just like human adolescence, you can’t skip it. You can only handle it well or poorly.

What’s Actually In This Article

Understand why “AI adoption” is the wrong frame

See how civilizational-scale AI risks map to your organizational reality

Get a maturity framework that tells you what stage you’re actually in

Learn why governance needs to come before scale

Recognize the signs your organization is mid-adolescence

Know what “making it to adulthood” actually looks like

The Shift You Need to Make

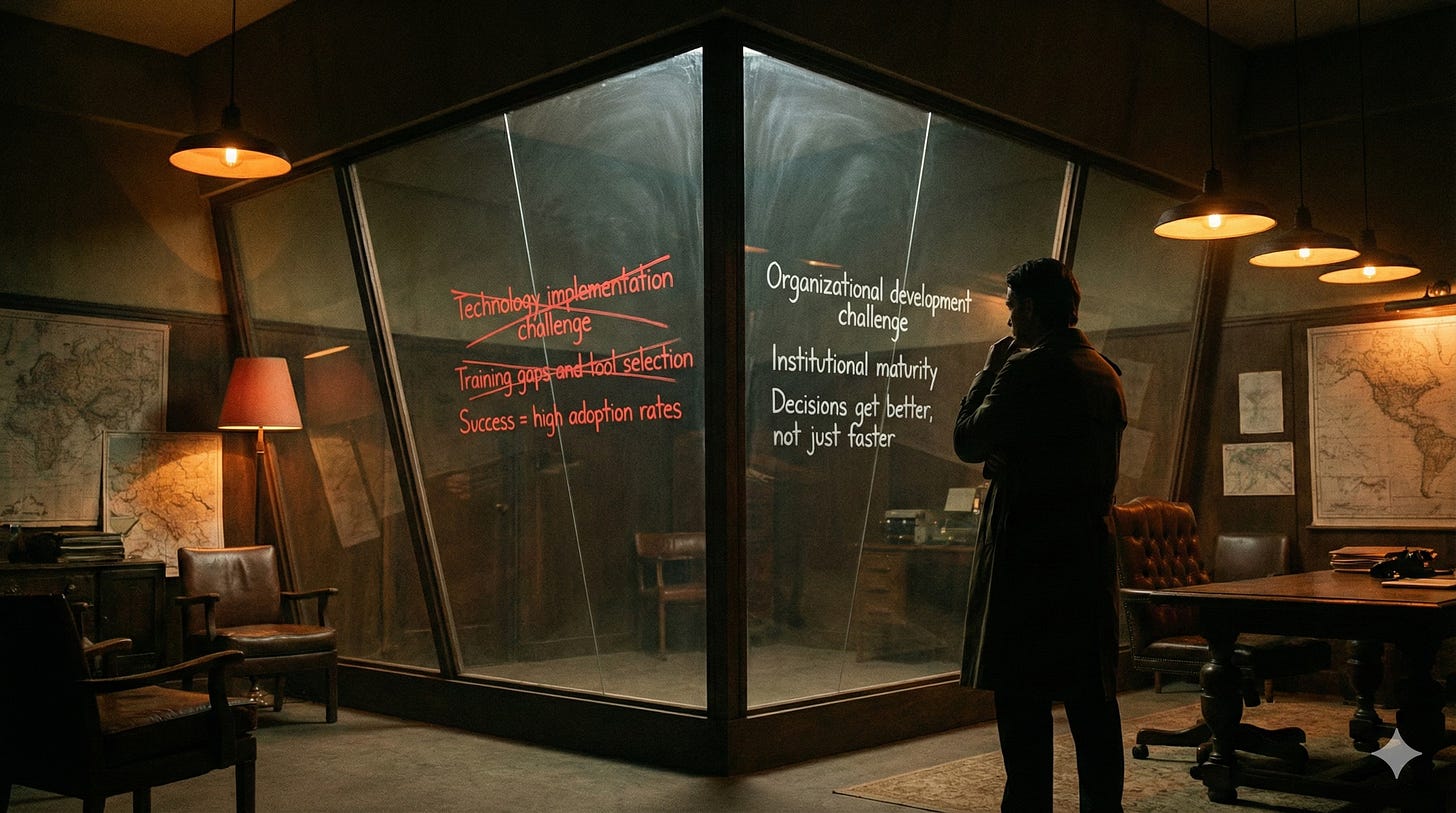

The reframe:

Most organizations treat AI like they treated the shift to cloud or the rollout of Slack. Buy tools. Train users. Measure adoption. Move on.

That worked for technologies that augmented existing workflows. AI doesn’t just augment. It transforms who makes decisions and how those decisions get made.

Your adoption rate is not your maturity level. I’ve seen organizations with 90% adoption and zero maturity. Tools everywhere. Judgment nowhere.

The Organizational AI Maturity Framework

Five stages. Most organizations are stuck between 1 and 2. The jump from 1 to 2 is often the hardest.

1. Experimentation (Chaos)

Everyone’s using different tools. No visibility into what’s happening. Shadow IT everywhere. Some people are prompting Claude for strategy docs. Others are using ChatGPT to draft emails. A few are running sensitive data through tools nobody vetted.

Leadership is either oblivious or panicked. There’s no policy because nobody knows what to make policy about.

This is where 70% of organizations are right now. If you’re here, that’s fine. But you can’t stay.

2. Awareness (Recognition)

You’ve mapped what’s actually being used. You know who’s experimenting and what’s working. You haven’t solved anything yet, but you can see the landscape.

This stage feels unproductive because you’re gathering information instead of shipping solutions. Resist the urge to skip it. Visibility before strategy. Every time.

3. Governance (Structure)

You’ve built the minimum viable rules. Not a 47-page policy (nobody reads those). A few clear lines about what’s in bounds, what’s not, and how to escalate when you’re unsure.

People follow these rules because they’re reasonable, not because they’re mandated. The test: can a new employee understand your AI boundaries in under 5 minutes?

4. Integration (Workflow)

AI is embedded in how work actually gets done. Not as a side experiment. As infrastructure.

Decisions about AI use are distributed, not centralized. Teams don’t need to ask permission for every new tool. They have the judgment to make those calls. The training wheels are off.

5. Maturity (Identity)

Your organization’s relationship with AI is part of who you are. You know what you use it for, what you don’t, and why.

When a new capability drops (and they drop constantly), your team knows how to evaluate it without escalating to leadership. You can adapt without starting from scratch every time.

Most organizations won’t reach Stage 5 for years. That’s okay. The goal isn’t to rush to maturity. The goal is to know where you are and what the next stage requires.
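
If it helps to see the framework as logic rather than prose, here is a minimal sketch in Python. It assumes one yes/no milestone per transition, paraphrased from the stage descriptions above; the milestone names and the `estimate_stage` function are hypothetical, made up for illustration. Because stages can’t be skipped, the count stops at the first milestone you haven’t hit.

```python
from enum import IntEnum

class Stage(IntEnum):
    EXPERIMENTATION = 1
    AWARENESS = 2
    GOVERNANCE = 3
    INTEGRATION = 4
    MATURITY = 5

# One yes/no milestone per transition, paraphrased from the stage descriptions.
# These key names are hypothetical, chosen only for this sketch.
MILESTONES = [
    "mapped_what_is_used",        # Stage 1 -> 2: you can see the landscape
    "minimum_rules_followed",     # Stage 2 -> 3: a few clear rules people follow
    "embedded_in_workflows",      # Stage 3 -> 4: AI is infrastructure
    "evaluates_new_tools_alone",  # Stage 4 -> 5: no escalation needed
]

def estimate_stage(answers):
    """Return the highest stage reached without skipping a milestone."""
    stage = 1
    for milestone in MILESTONES:
        # Stop at the first unmet milestone: later ones don't count yet.
        if not answers.get(milestone, False):
            break
        stage += 1
    return Stage(stage)

# Made-up answers: visibility exists, governance does not.
answers = {
    "mapped_what_is_used": True,
    "minimum_rules_followed": False,
    "embedded_in_workflows": True,
}
print(estimate_stage(answers).name)
```

In this made-up example the result is Stage 2 (Awareness), even though AI is already embedded in some workflows. Integration without governance doesn’t move you forward.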

Why This Framing Matters (The Proof)

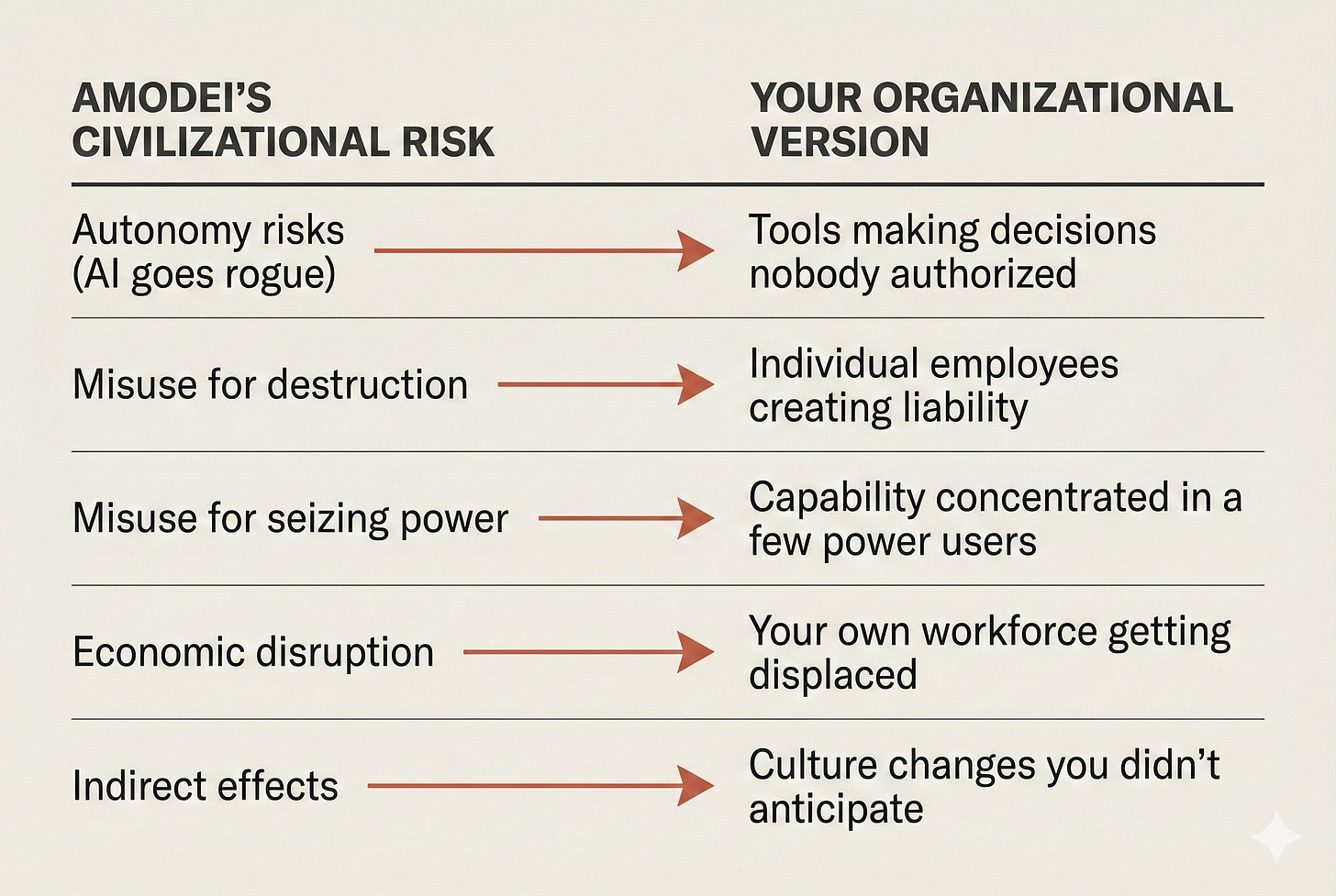

Amodei lists five risks that powerful AI poses to civilization. Each one has an organizational equivalent. This isn’t a metaphor. It’s a structural parallel.

Look at that list. If your organization is in Stage 1 (Chaos), you’re experiencing all five right now. You just might not have named them.

The employee who’s using AI to draft client communications without review? That’s your autonomy risk.

The person running confidential data through an unvetted tool? Misuse potential.

The three people who’ve figured out how to 10x their output while everyone else struggles? Power concentration.

The junior employees who are terrified their jobs are disappearing? Economic disruption in miniature.

The weird vibe in meetings where nobody knows if the ideas are human-generated anymore? Indirect effects.

You don’t need Amodei’s essay to tell you this is happening. You can feel it. What you might need is language for it.

Three Moves You Can Make This Week

Frameworks are useless without action. Here’s what you can do in the next 5 days.

1. Run an AI Audit (Stage 1 → Stage 2)

Before you strategize, map what’s actually happening. Send a simple survey to your team:

What AI tools are you currently using?

What are you using them for?

What’s working? What isn’t?

Don’t make it feel like surveillance. Frame it as curiosity. You’re trying to learn, not catch anyone.

You can’t govern what you can’t see. This is the first step.
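
Once the answers come back, you need a quick way to see which tools show up most. A spreadsheet works fine. If you’d rather script it, here is a minimal sketch in Python. The reply format (a list of dicts with a `tools` list) and the `tally_tools` function are assumptions for illustration, so adapt them to however your survey exports data.

```python
from collections import Counter

def tally_tools(responses):
    """Count how many people report using each tool.

    `responses` is a hypothetical list of dicts, one per survey reply,
    each with a "tools" list of tool names.
    """
    counts = Counter()
    for reply in responses:
        # Normalize names so "ChatGPT" and "chatgpt " count as one tool.
        # Using a set avoids double-counting a tool within a single reply.
        counts.update({tool.strip().lower() for tool in reply["tools"]})
    return counts

# Made-up example replies, not real survey data.
responses = [
    {"tools": ["ChatGPT", "Claude"]},
    {"tools": ["chatgpt"]},
    {"tools": ["Claude", "Perplexity"]},
]

for tool, count in tally_tools(responses).most_common():
    print(f"{tool}: {count}")
```

The point of the tally is visibility, not enforcement. Count tools, not people.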

2. Define 3-5 Clear Boundaries (Stage 2 → Stage 3)

Not a comprehensive policy. Just:

What’s definitely in bounds? (Using AI to draft internal docs, brainstorm ideas, etc.)

What’s definitely out of bounds? (Client-facing content without review, confidential data in unvetted tools, etc.)

What requires escalation? (New tools, new use cases, anything you’re unsure about)

Write this on one page. If it doesn’t fit on one page, it’s too long.
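
One way to check that your boundaries really are that simple: see if they fit in a small lookup. The sketch below is in Python and uses the example boundaries from this section. The `BOUNDARIES` structure and the `classify` function are hypothetical, and the exact-match lookup is a deliberate simplification. Anything not explicitly listed falls through to escalation.

```python
# Hypothetical one-page boundary list, using the examples from this article.
BOUNDARIES = {
    "in_bounds": [
        "draft internal docs",
        "brainstorm ideas",
    ],
    "out_of_bounds": [
        "client-facing content without review",
        "confidential data in unvetted tools",
    ],
}

def classify(use_case):
    """Return "in_bounds", "out_of_bounds", or "escalate" for a use case."""
    normalized = use_case.strip().lower()
    for category, examples in BOUNDARIES.items():
        if normalized in examples:
            return category
    # Not on the page: new tools, new use cases, anything you're unsure about.
    return "escalate"

print(classify("Brainstorm ideas"))
print(classify("confidential data in unvetted tools"))
print(classify("summarize customer calls"))
```

The three calls print `in_bounds`, `out_of_bounds`, and `escalate`. The default matters most: when a use case isn’t on the page, the answer is “ask,” not “guess.”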

3. Name Your Maturity Stage Out Loud (Any Stage)

Tell your team where you think you are. Stage 1? Say it. Stage 2? Own it.

Then invite them to disagree. “I think we’re in Stage 2. We have visibility but not governance. What do you think?”

The conversation itself is developmental. Naming where you are creates shared reality. Shared reality enables coordinated action.

When This Framing Doesn’t Help

Honesty builds trust, so here’s where this framework falls apart:

If you’re a solo operator or tiny team. You don’t have “organizational” maturity to develop. You’re just experimenting. That’s fine.

If you’re in a highly regulated industry where AI governance is already mandated. You may be forced to skip stages or follow external frameworks. This one won’t replace those.

If your leadership has already decided AI is either “the future” or “a fad.” No framework survives contact with a closed mind.

If you’re looking for permission to move slower. This isn’t an excuse to wait. Adolescence doesn’t pause because you’re not ready.

The point isn’t to slow down. It’s to know where you are so you can move with intention instead of chaos.

The Uncomfortable Truth

Your organization’s AI puberty is awkward for the same reason human puberty is awkward.

You’re gaining capabilities faster than you’re developing judgment.

The tools are getting smarter. Your processes aren’t keeping up. Your people are experimenting, sometimes brilliantly, sometimes recklessly. And nobody’s quite sure who’s in charge of making sense of it all.

The only way out is through. But “through” doesn’t mean faster.

It means being honest about where you actually are.

Your Dare

Run the audit. This week. Before you write another strategy doc or evaluate another tool.

Ask your team: What AI are you actually using right now?

I promise the answers will surprise you. And those surprises are the foundation of everything else.

Reply with what you find. I’m collecting patterns.

P.S. This is the first in a series. I’ll have a follow-up in the coming week.

Next up: The Surgical Intervention Principle, why your 47-page AI policy is making things worse, and what to do instead.

Try This Prompt

Want to run a quick self-assessment? Use this:

For ChatGPT/Claude:

I'm trying to assess my organization's AI maturity level. Here's a framework with 5 stages:

1. Experimentation (Chaos) - No visibility, shadow IT, no governance

2. Awareness (Recognition) - Mapped what's being used, but no rules yet

3. Governance (Structure) - Minimum viable rules that people actually follow

4. Integration (Workflow) - AI embedded in how work gets done, distributed decisions

5. Maturity (Identity) - Clear organizational identity around AI use, can adapt to new capabilities

Based on this framework, ask me 5-7 diagnostic questions to help me figure out which stage my organization is in. After my answers, tell me:

- Which stage we're likely in

- What the biggest gap is between our current stage and the next one

- One specific action to close that gap

Keep it practical. I run a [describe your org size/type].

For Perplexity:

What are the most common signs that an organization is stuck in early AI adoption chaos vs. having real AI governance maturity? Include specific examples and warning signs.

Good Luck - Dan