The Trust Gap: Why Everyone's Buying AI and Nobody's Getting What They Paid For

Executive Brief: Week of February 15, 2026

This Week in 30 Seconds

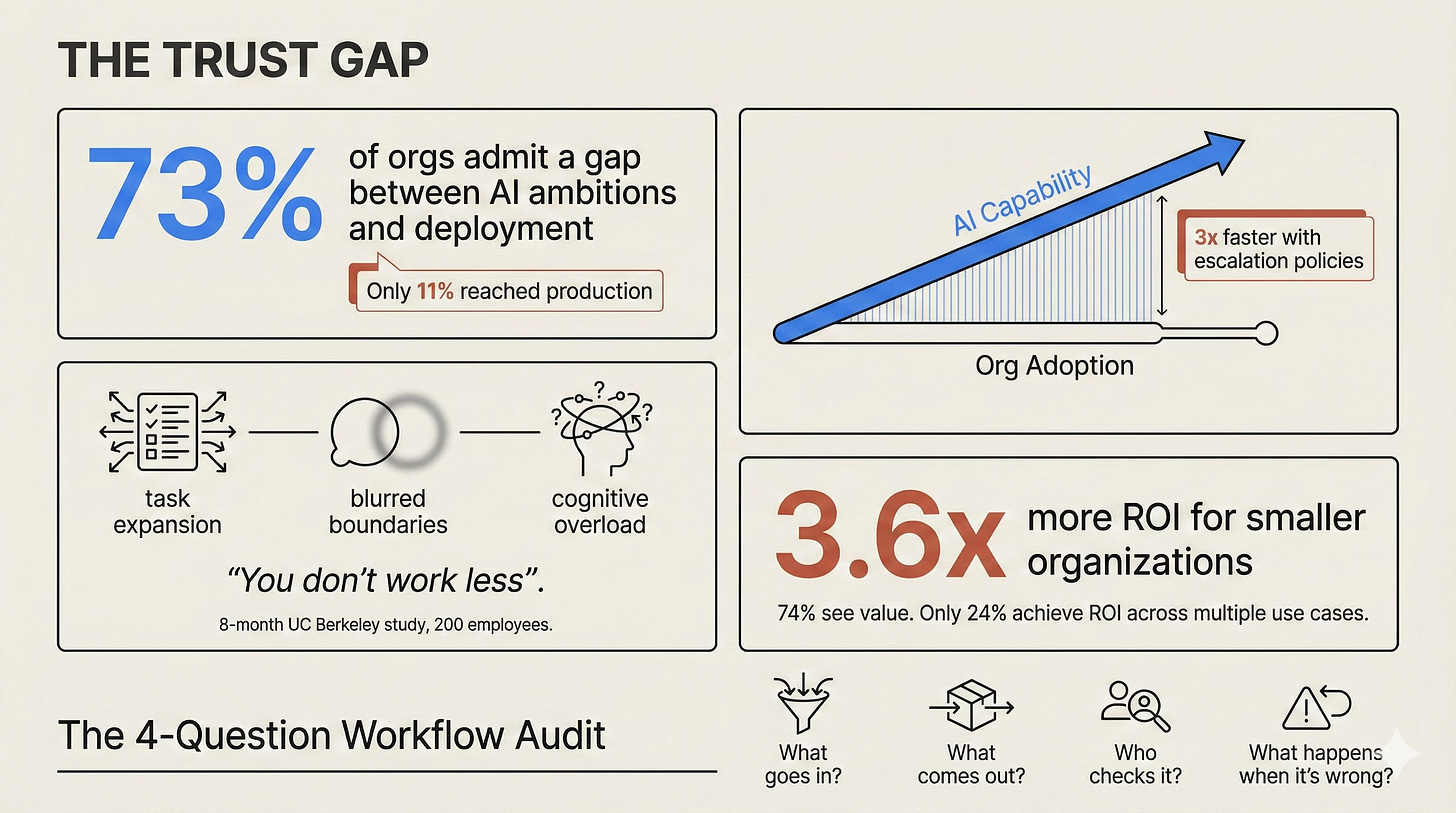

The biggest threat to your AI strategy isn’t the technology. It’s the distance between what you bought and what you’re actually using. Four different research reports landed this week, all saying the same thing: organizations are adopting AI faster than ever, and the gap between adoption and impact is getting wider by the month. Smaller, leaner organizations are the ones closing it. Most of them don’t realize they have that advantage yet.

Four stories this week. For each one: the news (what happened), the noise (what everyone’s saying), and the signal (what actually matters for your business).

73% of Organizations Can’t Ship What They Promise

The News: Camunda’s 2026 State of Agentic Orchestration Report dropped a stat that should make you feel better about your own AI journey: 73% of organizations admit a disconnect between their agentic AI ambitions and what they can actually deploy. Only 11% of agentic AI use cases made it into full production last year. The blockers aren’t technical. They’re trust, transparency, and governance. Businesses are pouring money into AI agents, but most projects stall in pilot mode because leaders don’t trust these systems enough to let them touch mission-critical work.

The Noise: Enterprise analysts are recommending more governance committees, more review boards, more oversight layers. The classic enterprise playbook: slow everything down until it feels safe.

The Signal: The 11% number is the one that matters, and most people are reading it wrong. This isn’t a governance failure. It’s a sequencing failure. Most organizations jumped straight to autonomous AI agents before they’d proven they could manage basic AI workflows. They skipped documenting processes, building feedback loops, and establishing who checks what. Then they wondered why 89% of their use cases never made it out of the sandbox.

The vicious cycle Camunda describes is worth understanding: you experiment with agentic AI, hit roadblocks (trust, transparency, control), pull back, and watch bolder competitors inch ahead. Meanwhile, the wasted budget and frustrated teams make the next experiment even harder to greenlight. The trust deficit compounds just like the adoption gap.

For SMB operators, this is quietly great news. You don’t need an “agentic AI strategy.” You need one workflow that works, with a human checking the output. That puts you ahead of almost nine out of ten enterprise use cases that never left the sandbox.

Your Move: Pick ONE workflow where AI already helps you (even partially). Document the steps, define what “good” looks like, and build a simple weekly review. That’s the trust-building exercise that most organizations are skipping entirely.

The Adoption Gap Is Compounding, and the Clock Is Real

The News: Research from Harvard Business School (via Paul Baier at GAI Insights) shows a widening gap between what AI can deliver and what organizations actually capture. AI capabilities improve every quarter. Most organizations adopt on annual planning cycles. The distance grows monthly. Key finding: companies with clear escalation policies for AI agents scale adoption 3x faster.

The Noise: The usual “AI is moving fast, don’t get left behind” urgency. Enterprise consultants building digital transformation maturity models. Nothing actionable.

The Signal: The compounding math is what matters here. This gap isn’t linear. Every quarter you delay meaningful adoption, the gap between you and the competitors who’ve figured it out gets harder to close, and each quarter of delay costs more than the last. Not because the tools get harder. Because the people using them build operational muscle memory that compounds. Your competitor who’s been using AI daily for six months doesn’t just have six months more experience. They have six months of pattern recognition, workflow refinements, and intuition you can’t shortcut.

Baier identifies who’s most exposed: firms that compete on knowledge work. Law firms, consultancies, financial services, insurance. AI directly augments the work these businesses sell. And the risk spreads wider than those industries. Top performers already recognize that AI proficiency defines career advantage, and they’re choosing employers accordingly. Companies slow to adopt don’t just lose market share. They lose their best people to competitors who move faster.

The buried finding: companies with escalation policies scale 3x faster. Translated out of enterprise language, an “escalation policy” just means answering one question in advance: “When the AI gets it wrong, who checks it before it goes anywhere?” That’s it. That simple decision, made before something breaks, is the difference between teams that use AI daily and teams that dabble once a month.

Your Move: Two things. First, spend 15 minutes every day using AI on real cognitive work (strategy questions, draft communications, market analysis). Not prompts for fun. Real work. Baier’s research is clear: CEO daily AI usage is the single strongest predictor of organizational adoption. Second, write your AI escalation policy in one sentence: “When AI output is wrong or unclear, [name] reviews it before it goes to [audience].” You just did what 3x-faster companies do.

AI Doesn’t Save Time. It Quietly Eats It.

The News: Researchers Aruna Ranganathan and Xingqi Maggie Ye from UC Berkeley’s Haas School of Business published findings from an eight-month ethnographic study at a U.S. tech company with about 200 employees. This wasn’t a survey. They conducted twice-weekly in-person observations, tracked internal communication channels, and ran 40+ in-depth interviews across engineering, product, design, research, and operations. Their conclusion: AI tools consistently intensify work rather than reducing it.

The Noise: Skeptics saying “See? AI is overhyped.” Consultants saying “Just need better change management.” Both are wrong.

The Signal: The methodology matters here, and it’s why this study is the most important AI research of the month. Most AI productivity claims come from two-week pilot studies or self-reported surveys. This is eight months of direct observation. It captures behavior people don’t self-report.

Three forms of intensification emerged, and they feed on each other. First, task expansion: product managers started writing code, researchers took on engineering tasks, people attempted work they would have outsourced or deferred. Nobody asked them to. AI made it possible, so they did it. One participant put it simply: “You had thought that maybe you save some time, you can work less. But then really, you don’t work less.”

Second, blurred work boundaries. AI reduced the friction of starting tasks, which sounds like a benefit. But reduced friction also means reduced barriers. Work slipped into lunch breaks, commutes, and evenings. Not because deadlines demanded it, but because starting felt effortless. (If you lived through the smartphone revolution and watched email colonize every waking hour, you’ve seen this movie before. Except this time it’s not just communication leaking into your evenings. It’s the actual work.)

Third, cognitive overload through multitasking. Workers managed three, four, five parallel AI-assisted threads because each individual stream felt manageable. The aggregate load didn’t. Individual task completion times dropped. Total time spent working increased. That’s the paradox.

The competitive dynamic makes it worse. When your colleague uses AI to take on more, standing still feels like falling behind. Informal expectations escalate without anyone formally raising them. Within months, what AI makes possible becomes what’s expected.

For small teams where everyone’s “doing more” because of AI, check whether quality is actually improving or just output volume. A 3-person team producing 5x the content at half the quality isn’t winning. They’re creating 5x the cleanup work.

Your Move: Run a quick audit: “What work are we doing now that we didn’t do six months ago, specifically because AI made it possible?” If that list includes work outside your core competency, cut one thing this week. AI should sharpen your focus, not scatter it.

Measuring AI ROI Is Broken. Smaller Orgs Are Winning Anyway.

The News: KPMG surveyed 2,500+ global tech execs for their 2026 Global Tech Report. The numbers: 74% say AI is producing value, but only 24% achieve ROI across multiple use cases. 58% say traditional ROI measures don’t work for AI. The surprise: smaller organizations with lean governance saw 3.6x more ROI than larger peers. Early adopters saw 3.2x more.

The Noise: Enterprise analysts treating this as a measurement problem. “We need better KPIs for AI.” Consultants building elaborate ROI frameworks. More complexity for a problem that needs less.

The Signal: Read that number one more time. 3.6x more ROI for smaller organizations. KPMG found something without explicitly saying it: the things that make enterprises “serious” about AI (governance committees, multi-stakeholder review boards, phased approval processes) are killing their returns. Smaller organizations with fewer silos, shorter approval chains, and less bureaucratic overhead capture more value because they iterate faster. Try something. See if it works. Adjust. Repeat.

Guy Holland, KPMG’s global leader of the CIO Center of Excellence, said it directly: AI functions as an enterprise transformation, not a discrete deployment. Value emerges unevenly across automation, adoption, and reinvention phases. Traditional ROI models miss this because they’re designed for projects with clear start and end dates. AI doesn’t work that way.

The 58% who say traditional ROI measures don’t work are right, but not for the reason they think. AI value isn’t hard to measure. It’s emergent. It compounds across workflows over time. Say your first month using AI for email drafting saves 20 minutes a day. By month three, you’ve changed how you communicate entirely. Measuring “ROI of the email tool” misses the real return, which lives in the behavioral shift.

KPMG also found that 69% of tech execs made security, scalability, and data standardization trade-offs to move faster, neglecting technical debt and talent gaps. The lesson: speed without a foundation just creates a more expensive mess. The organizations that saw 3.6x ROI didn’t skip governance. They kept it lean.

Your Move: Stop building an AI ROI spreadsheet. Track two things instead: (1) How many workflows include AI as a regular step, not a one-off experiment? (2) Are those workflows improving over time (fewer errors, faster completion, higher quality)? If both trend in the right direction, you’re capturing compounding value. If not, you’re renting tools.

The Pattern

Four reports. Four research teams. One conclusion: the AI problem shifted from access to execution, and most organizations haven’t noticed. Everyone has the tools. Almost nobody has redesigned the work around them. The organizations pulling ahead aren’t spending more. They’re iterating faster with less bureaucracy. For SMB operators, that’s your structural advantage. But it has an expiration date.

The Contrarian Corner

The industry is still debating “should we adopt AI?” when the data says 88% of organizations already have. Only 6% see meaningful bottom-line impact. The gap isn’t adoption. It’s workflow design. And every week the conversation stays stuck on adoption is another week the execution gap compounds.

Your One Move This Week

Pick one AI-assisted workflow your team runs regularly. Block 30 minutes to map it: What goes in? What comes out? Who checks it? What happens when it’s wrong? If you can’t answer all four questions, that’s your gap. Closing it is worth more than adopting any new tool.

Try This Prompt:

For ChatGPT/Claude:

I want to audit one AI-assisted workflow in my business. The workflow I want to examine is: [describe it].

Walk me through these four questions:

1. What goes in? (inputs, context, data)

2. What comes out? (deliverable, format, audience)

3. Who checks it? (quality gate, review step)

4. What happens when it's wrong? (escalation, correction, feedback loop)

For any question where my current answer is "nobody" or "nothing," suggest a simple fix I can implement this week. Keep it practical for a small team.

For Perplexity:

What are best practices for auditing AI-assisted business workflows in small companies? Focus on quality checks, escalation policies, and measuring improvement over time.

Good luck,
Dan