Most "AI Skills" Aren't AI Skills At All — You Already Have What You Need

The 75/25 Framework: why you're more ready for AI than you think

Everyone’s racing to “learn AI skills” before they fall behind.

But you’re not actually behind.

The “AI skills gap” is a misnomer. Most people already possess the critical capabilities needed to work effectively with AI. They just don’t recognize how directly those skills transfer. The popular question is “What AI skills do I need to learn?” The right question is “Which of my existing skills apply directly to AI collaboration?”

I’ve spent the last couple of years leading teams through AI integration as a human-centered designer. I’ve watched talented professionals, people who excel at facilitation, problem-solving, and communication, freeze up when asked to “learn prompting.” The pattern became impossible to ignore: the skills gap wasn’t about AI at all.

I’ll show you.

What You’ll Get in This Piece

Discover the 75/25 split — Why three of the four critical AI capabilities are already in your toolkit

Learn the 75/25 Framework — The exact breakdown of what actually determines AI output quality

See the before/after shift — How the mental model changes when you recognize existing skills

Get concrete examples — How structured thinking, clear direction, and quality recognition directly apply across industries

Understand the 25% that’s actually new — What you genuinely need to learn about LLMs and verification

Access ready-to-use prompts — Starting templates that lean on your existing strengths

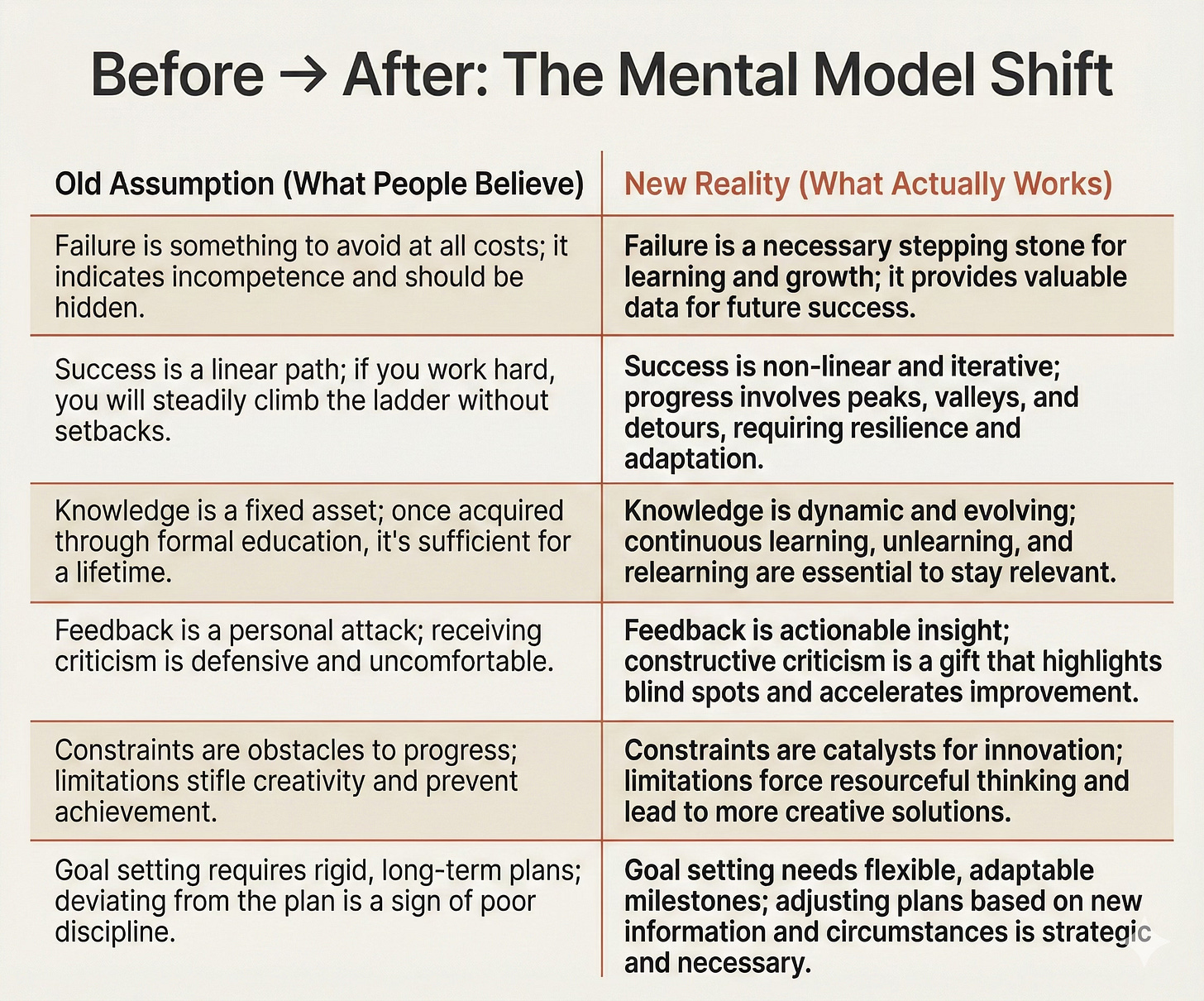

The Reframe That Changes Everything

The “AI skills gap” isn’t about missing capabilities. It’s about not recognizing that structured thinking IS an AI skill, clear communication IS an AI skill, and domain expertise IS an AI skill.

Think about that for a second.

You’ve been building these capabilities your entire career. Every project you’ve run. Every meeting you’ve facilitated. Every complex problem you’ve broken down into manageable parts. Every time you’ve had to explain technical concepts to non-technical stakeholders. Every domain where you can spot errors because you know what “right” looks like.

All of it applies directly to working with AI.

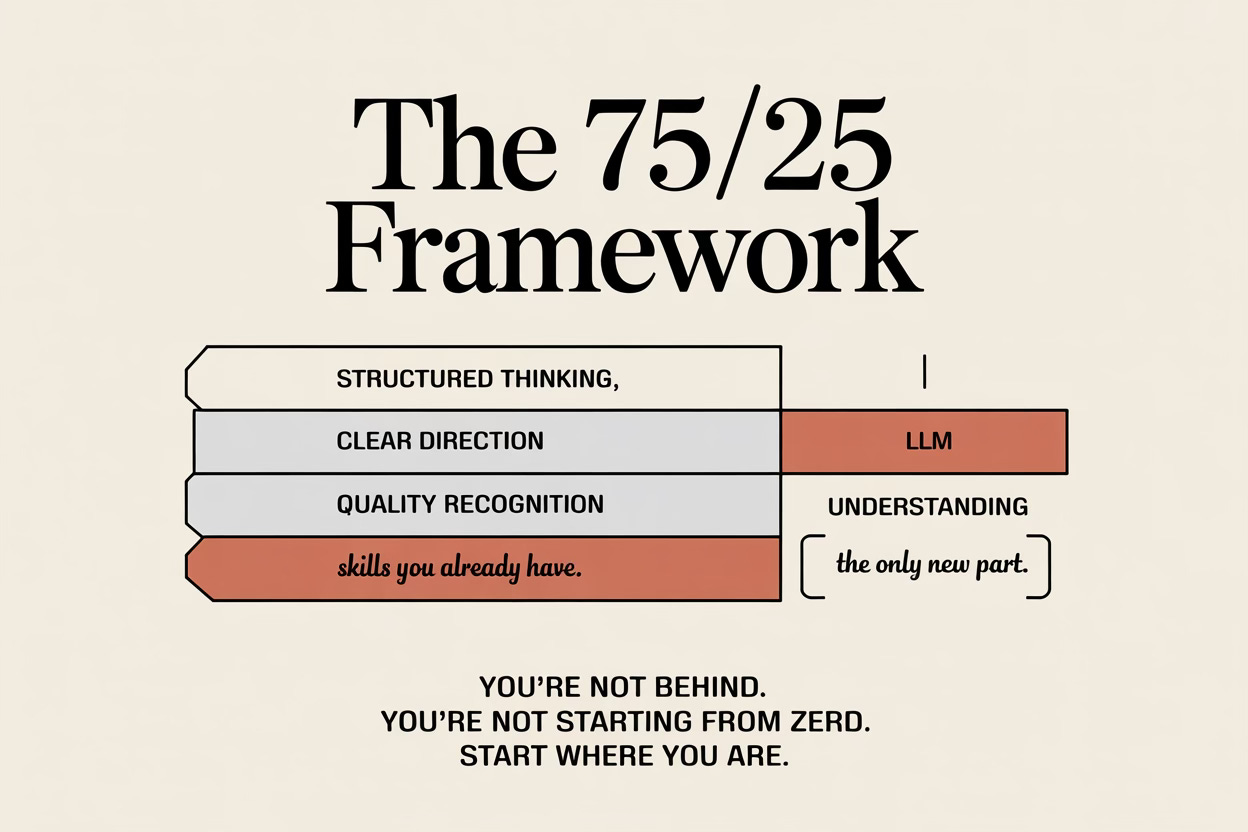

The 75/25 Framework

Most AI training gets it backwards. It starts with “how to prompt” when it should start with “what you already know.”

Look, I get the confusion. When someone says “learn AI skills,” it feels like you’re starting from scratch. But that’s only true for 25% of what matters. The other 75%? You’ve been building it your whole career without realizing it.

This is how it breaks down.

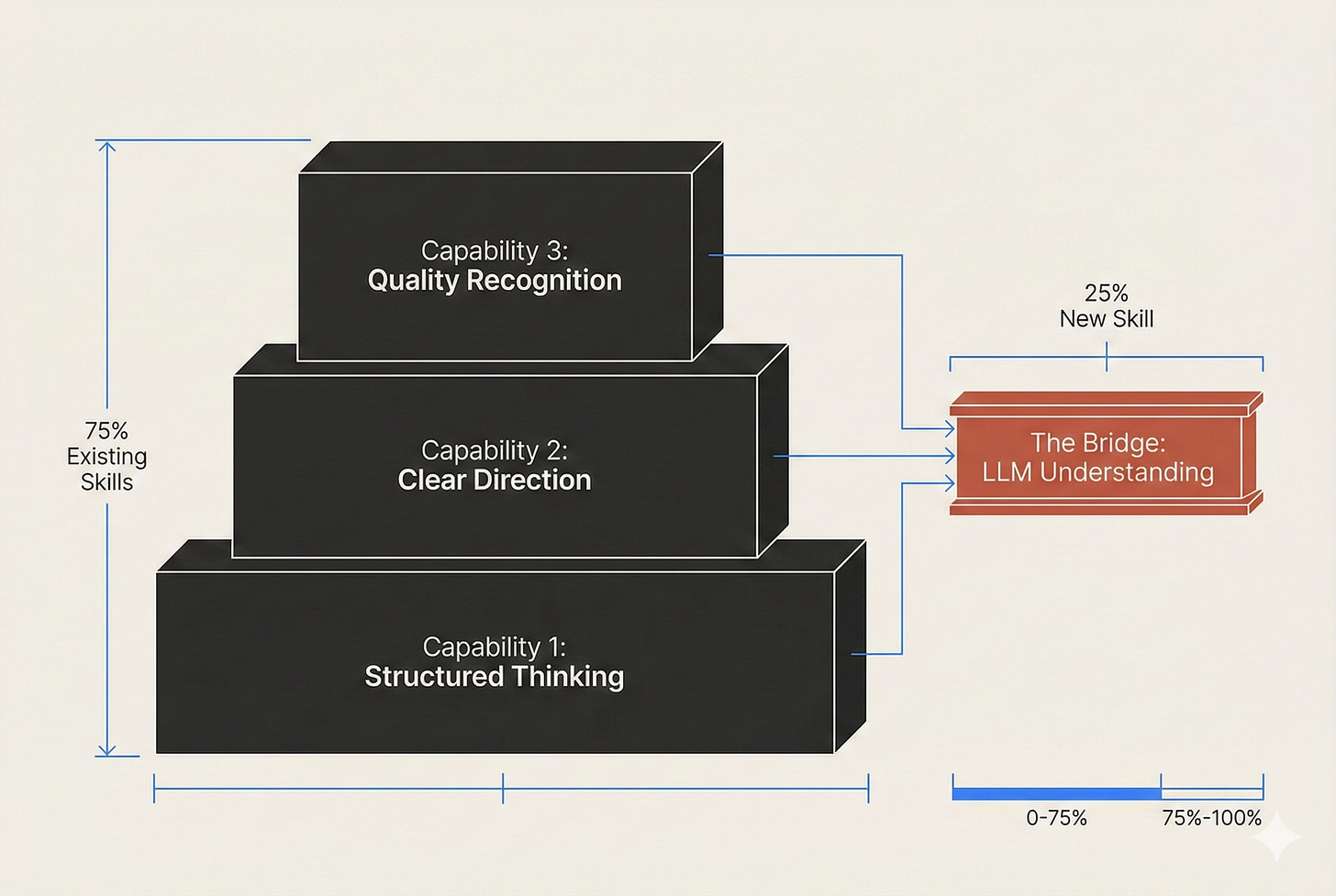

Your Foundation: Three Capabilities You Already Have

These three areas determine most of your effectiveness with AI. None of them are AI-specific. All of them transfer directly from how you already work.

Capability 1: Structured Thinking

Can you take a messy problem and break it into parts? Can you identify what you know versus what you need to figure out? Can you decide what to tackle first?

That’s what drives good AI collaboration. You’re not asking the AI to solve everything at once. You’re directing it through a methodical process: chunk the work, build on each output, adjust as you go.

This is how you’ve always approached complex problems. Investigation. Decomposition. Sequential problem-solving. The only difference now is you’re guiding a machine through those same steps instead of doing them yourself or directing a team.

The better you are at structuring problems, the better you’ll be at getting AI to help solve them. Simple as that.

Capability 2: Clear Direction

Can you explain what you want in a way others can understand and act on? Can you provide enough context without overwhelming? Can you keep collaborators focused when they start to drift?

That’s what prompting actually is: giving clear direction.

Think about what you do when someone on your team misunderstands an assignment. You don’t start over from scratch. You clarify the goal, add missing context, correct their course, and confirm understanding. Same mechanics apply here.

Your prompts work when they do what good communication always does: establish shared understanding, set clear expectations, and guide progress toward a specific outcome. If you’ve ever written a project brief, coached someone through a task, or redirected a conversation that went sideways, you already know how to do this.

The medium changed. The principles didn’t.

Capability 3: Quality Recognition

Can you look at work in your domain and immediately spot what’s good, what’s wrong, and what’s missing? Can you separate signal from noise? Can you tell when something sounds right but is actually off?

That’s your quality filter. And it’s the most underrated part of working with AI.

Two people can run the same prompt. One gets value, the other gets garbage. One person can recognize quality. The other can’t. That’s the difference.

Your domain knowledge determines whether you trust bad output or catch it. Whether you ask follow-up questions that matter or accept generic responses. Whether you apply what the AI produces or recognize when it’s headed in the wrong direction.

Experienced professionals often “get” AI faster than junior people. They can immediately tell when output is useful versus when it’s plausible-sounding nonsense. That’s domain expertise in action.

The Bridge: One New Skill That Connects Everything

This is the 25% that’s actually new. And even this isn’t complicated. It’s just understanding your collaborator.

What You’re Actually Learning:

You need to know that AI generates text probabilistically, one word at a time (strictly, one token at a time), based on patterns it learned during training. This means:

It can sound confident while being wrong

It can drift mid-response and contradict itself

It can’t verify its own accuracy

It has no memory outside the current conversation, unless the tool you’re using adds a memory feature
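To make “probabilistically, one word at a time” concrete, here is a toy sketch in Python. It is not how any real model is built: the prompt, the candidate words, and the probabilities are all invented for illustration, and `sample_next_word` and `sample_many` are hypothetical helper names. What it shows is the one behavior that matters here: the same prompt can produce a wrong answer some of the time, delivered exactly as fluently as the right one.

```python
import random

# Hypothetical toy "model": for one prompt, a probability for each candidate next word.
# Real LLMs work over tokens and enormous vocabularies; these numbers are invented.
NEXT_WORD_PROBS = {
    "The capital of Australia is": {"Canberra": 0.6, "Sydney": 0.3, "Melbourne": 0.1},
}


def sample_next_word(prompt: str, rng: random.Random) -> str:
    """Pick one next word at random, weighted by its probability."""
    probs = NEXT_WORD_PROBS[prompt]
    words = list(probs)
    weights = [probs[word] for word in words]
    return rng.choices(words, weights=weights, k=1)[0]


def sample_many(prompt: str, n: int, seed: int = 0) -> dict[str, int]:
    """Count how often each candidate word is chosen across n samples."""
    rng = random.Random(seed)
    counts = {word: 0 for word in NEXT_WORD_PROBS[prompt]}
    for _ in range(n):
        counts[sample_next_word(prompt, rng)] += 1
    return counts


if __name__ == "__main__":
    # The correct word wins most often, but the wrong ones still show up.
    print(sample_many("The capital of Australia is", 1000))
```

Nothing in the sampling step checks whether the chosen word is true. That check is your job, which is why quality recognition matters so much.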

Once you understand that, the rest is about risk management. You learn to recognize when AI outputs need verification (high-stakes decisions, unfamiliar domains) versus when you can move fast (brainstorming, first drafts, exploration).

You combine this understanding with your domain expertise (Capability 3) to build reliability checks. You develop a feel for when to trust, when to verify, and when to correct course.
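The trust-versus-verify judgment described above can be sketched as a small decision rule. This is one possible reading, not a standard: the `Task` fields, the three review levels, and their wording are assumptions made for illustration. The two inputs come straight from the text: how high the stakes are, and whether you know the domain well enough to catch errors.

```python
from dataclasses import dataclass


@dataclass
class Task:
    description: str
    high_stakes: bool      # would an error cause real cost or harm?
    familiar_domain: bool  # can you spot mistakes yourself?


def review_level(task: Task) -> str:
    """Suggest how much checking an AI output deserves (illustrative rule only)."""
    if task.high_stakes and not task.familiar_domain:
        # You cannot validate it yourself and mistakes are costly.
        return "verify with a domain expert"
    if task.high_stakes or not task.familiar_domain:
        return "verify key claims yourself"
    # Brainstorming, first drafts, exploration in a domain you know.
    return "move fast"


if __name__ == "__main__":
    draft = Task("brainstorm workshop names", high_stakes=False, familiar_domain=True)
    email = Task("pricing email to customers", high_stakes=True, familiar_domain=True)
    print(review_level(draft))  # move fast
    print(review_level(email))  # verify key claims yourself
```

In practice nobody runs a function before every prompt. The value is in asking the two questions out loud until they become habit.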

Think of it like this. Your three existing capabilities are your engine. This new understanding is the transmission that connects your engine to the AI’s capabilities. Without it, you can’t transfer power effectively. But the engine itself? You already built that.

The point: 75% of what determines your AI effectiveness comes from professional capabilities you’ve spent years developing. The remaining 25% is learning how LLMs actually work so you can apply those capabilities appropriately.

What This Actually Looks Like in Practice

The framework reveals why some people seem to “get” AI immediately while others struggle.

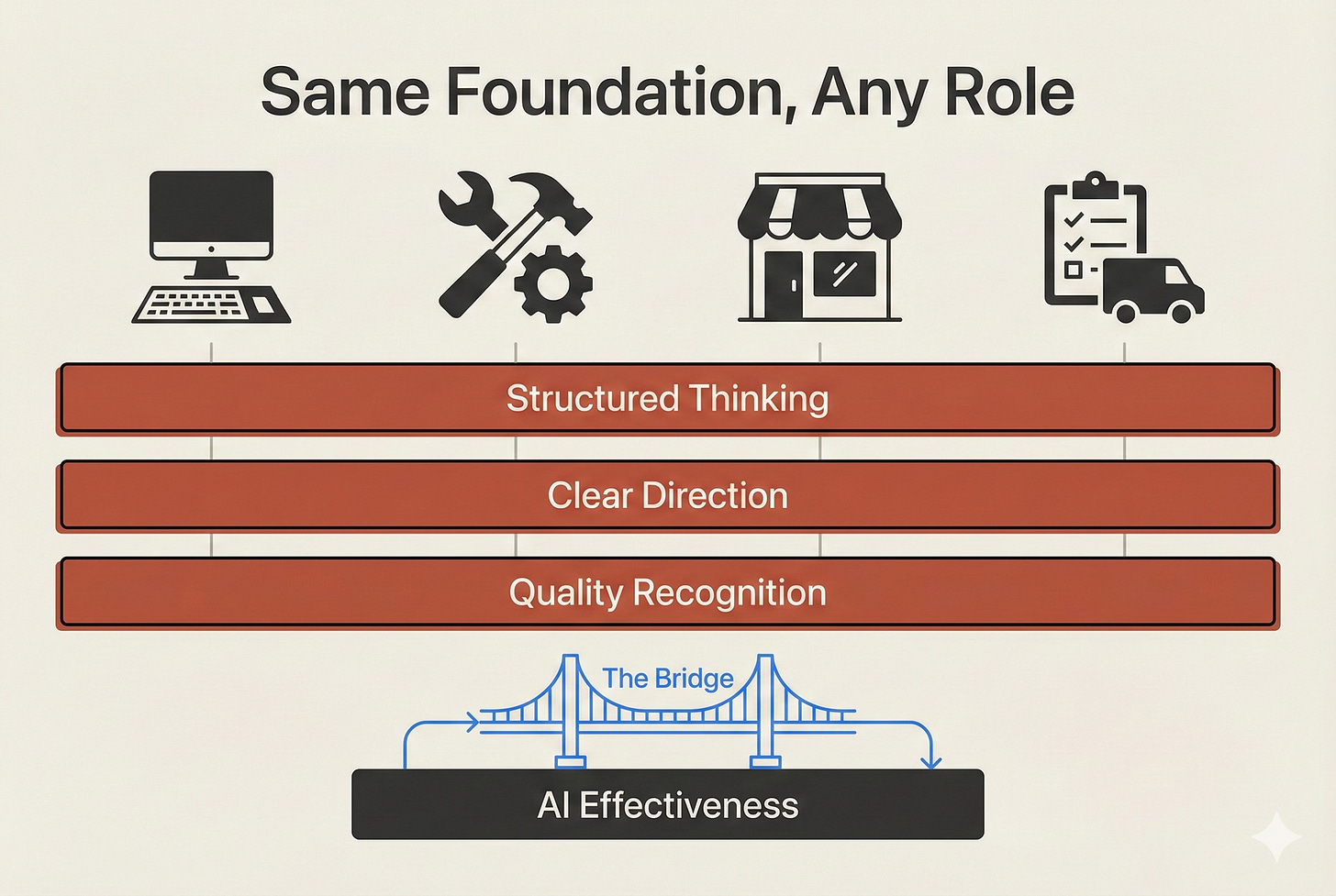

Now watch how this plays out across different roles and industries.

The Project Manager

Sarah already knows how to chunk complex work into phases. She knows how to set clear goals at the outset of a project. She knows how to redirect drifting conversations when her team gets sidetracked.

When she started using AI for project planning, she applied those exact same skills. She recognized that her project management instincts transferred directly. Break down the deliverable. Guide the AI through each component. Redirect when outputs drift off-scope.

She got better outputs immediately because she recognized skills she already had.

The Writer

Marcus already knows how to structure arguments. He knows how to use precise language. He knows how to iterate on drafts, tightening logic and improving clarity with each pass.

Those skills transfer directly to prompt refinement and output evaluation. When the AI produces something generic, he recognizes it the same way he’d recognize weak writing from a junior colleague. When the structure feels off, he adjusts his guidance the same way he’d restructure an outline. His writing expertise makes him effective with AI.

The Marketing Analyst

Diana has strong foundation capabilities for research tasks. She knows how to break down complex market questions, structure her investigation, and verify data against what she knows about customer behavior. When she uses AI for research, she’s fluent: confident, effective, getting high-quality results quickly.

But she’s still building confidence with “The Bridge” (understanding LLM behavior) for customer-facing work. She understands verification protocols intellectually, but hasn’t internalized them enough to trust AI outputs that will go directly to customers. So she’s hesitant in that application.

The framework reveals something important: readiness is task-specific, and it depends on which capabilities are strong for which applications. Diana doesn’t need to “get better at AI” in general. She needs to build verification protocols specifically for customer communications, and she’ll become fluent there too.

The HVAC Technician

James already knows how to diagnose problems systematically. When a customer calls with a heating issue, he doesn’t just guess randomly. He asks targeted questions, rules out possibilities, and narrows down to the root cause. That’s structured thinking in action.

He knows how to explain technical issues to homeowners in plain language. He translates complex HVAC concepts into terms non-technical people can understand and make decisions about. That’s clear direction and communication.

He knows from years of experience when something doesn’t match expected performance. He can look at system behavior and spot immediately when readings are off or components are failing. That’s quality recognition through domain expertise.

Those same skills let him work with AI to generate maintenance schedules that make technical sense, draft clear customer communications that translate complexity appropriately, and verify technical recommendations against his domain knowledge. The gap is just learning basic LLM behavior: understanding probabilistic generation, recognizing when to verify versus trust, building protocols for high-stakes outputs.

He’s applying his HVAC expertise and diagnostic skills through a new interface.

The Retail Store Manager

Maria chunks complex inventory problems into manageable parts. She communicates clearly with suppliers about needs and with staff about priorities. She uses domain knowledge accumulated over years to spot pricing errors or suspicious patterns that newer employees miss.

When she approaches AI for scheduling optimization or customer communication, those existing skills directly apply. She’s 75% of the way there before she types a single prompt.

The Field Service Coordinator

Tom breaks down service routes systematically, considering travel time, job complexity, and technician capabilities. He writes clear dispatch instructions that his team can follow without confusion. He knows from experience when a job estimate seems off (either too high or dangerously low).

All three foundation capabilities, immediately applicable to working with AI for route optimization, communication templates, and estimate verification.

The pattern is clear across every role: effectiveness comes from applying existing professional capabilities.

How to Start From Where You Are

Now that you see the 75/25 split, here’s how to actually use this framework.

1. Self-Assess Against Your Foundation Capabilities

Copy this into a note and fill it out honestly. It will take you less than 10 minutes and will show you exactly where you stand.

Capability 1 — Structured Thinking:

- Can I break complex problems into component parts?

- Do I have approaches I use to navigate unfamiliar territory?

- Rating: Strong / Developing / New Territory

Capability 2 — Clear Direction:

- Can I write clear briefs or redirect confused conversations?

- Do I structure information so others can follow my thinking?

- Rating: Strong / Developing / New Territory

Capability 3 — Quality Recognition:

- In which domains can I verify accuracy and spot errors?

- Where can I apply best practices and catch mistakes?

- Rating: [List your strong domains]

The Bridge — LLM Understanding:

- Do I understand probabilistic generation and why AI can be confidently wrong?

- Can I recognize when AI is drifting and needs redirection?

- Do I have verification protocols for high-stakes outputs?

- Rating: Strong / Developing / New Territory

Most people discover they’re Strong or Developing in the three foundation capabilities, and New Territory only in The Bridge. That’s the point. You’re not starting from zero.

2. Start an AI Conversation Using Your Direction Skills

Treat it like briefing someone on a task. You wouldn’t hand off work without context, goals, and constraints. You’d be clear about what success looks like.

Do the same thing with AI. Use this structure:

Try This Prompt

For ChatGPT/Claude/Gemini:

I'm working on [specific goal or problem you want to solve].

Context: [2-3 sentences explaining relevant background, constraints, or what you've tried already]

My role and expertise: [Your domain knowledge, experience level, or perspective on this topic]

What I need from you: [Specific output, thought partnership, analysis, or deliverable you're looking for]

Constraints or preferences: [Format requirements, length, tone, approach, or limitations]

Let's start by [first concrete step or question to begin with].

This isn’t a “prompt template” in the traditional sense. It’s how you’d brief any collaborator on a task. You’re just applying that structure to a conversation with AI instead of with a colleague.

Note: This is a collaboration structure template, not a search query. For research questions, see the Perplexity prompts in the P.S. section below.
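If you reuse this briefing structure often, you could fill it in programmatically. The sketch below is a convenience, not part of the framework: `build_brief` and its parameter names are hypothetical, and the only rule it enforces is that no section is left blank, since a brief with a missing goal or missing context is the kind you wouldn’t hand to a colleague either.

```python
def build_brief(goal: str, context: str, expertise: str,
                request: str, constraints: str, first_step: str) -> str:
    """Assemble the six briefing sections into one prompt string."""
    fields = {
        "goal": goal,
        "context": context,
        "expertise": expertise,
        "request": request,
        "constraints": constraints,
        "first_step": first_step,
    }
    # Refuse to build a brief with an empty section.
    missing = [name for name, value in fields.items() if not value.strip()]
    if missing:
        raise ValueError(f"Brief is missing: {', '.join(missing)}")
    return "\n\n".join([
        f"I'm working on {goal}.",
        f"Context: {context}",
        f"My role and expertise: {expertise}",
        f"What I need from you: {request}",
        f"Constraints or preferences: {constraints}",
        f"Let's start by {first_step}.",
    ])


if __name__ == "__main__":
    print(build_brief(
        goal="a 90-day onboarding plan for new field technicians",
        context="We hire in batches of five. The current plan is a two-page checklist.",
        expertise="Service manager with ten years of dispatch experience",
        request="A week-by-week outline I can review with my team leads",
        constraints="One page, plain language, no new software",
        first_step="listing what a technician must be able to do by day 30",
    ))
```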

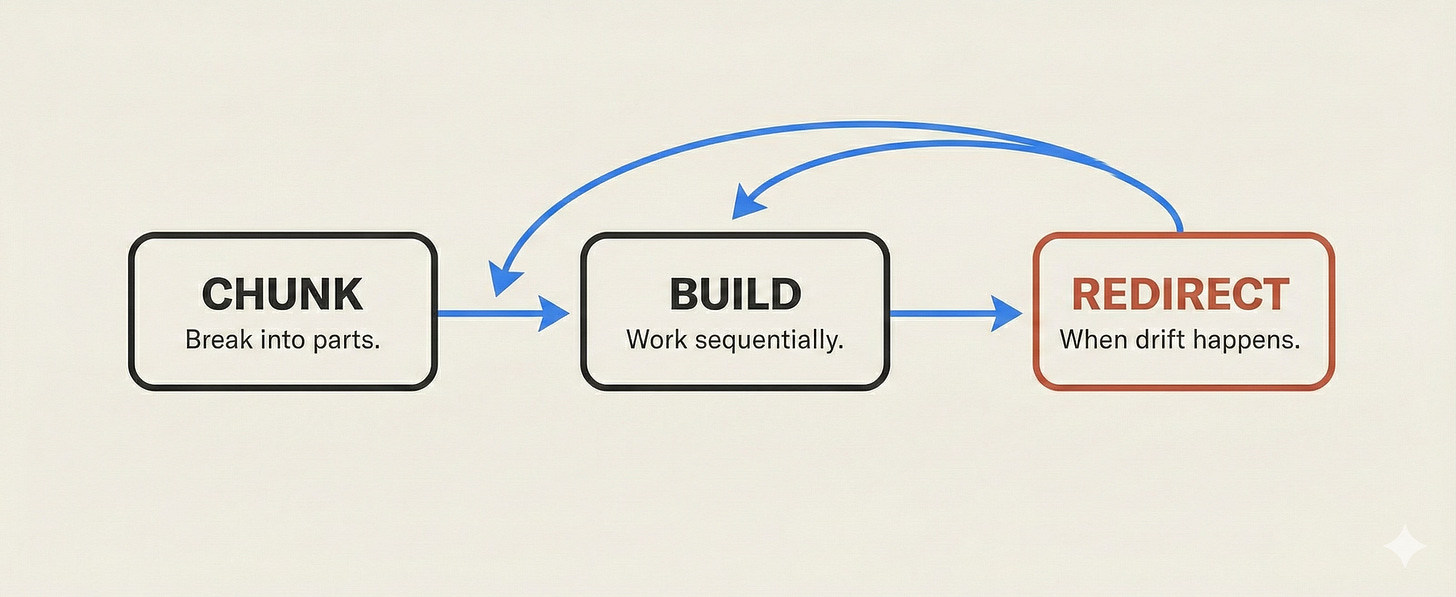

3. Apply the Chunk → Build → Redirect Pattern

You already do this when managing complex work or guiding confused team members. Now apply it to AI.

Chunk: Break the problem into sequential parts. Don’t try to tackle everything at once. Identify distinct phases or components and work through them in order.

Build: Work through one part at a time. Use each output as input for the next. Build momentum and context gradually instead of expecting perfect results from a single massive prompt.

Redirect: When the conversation drifts (and it will), bring it back to the original goal and key constraints. This is exactly what you’d do when a team member goes off on a tangent. You acknowledge the point, then guide back to the objective.
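For readers who think in loops, the Chunk → Build → Redirect pattern can be sketched as a short routine. This is an illustration under stated assumptions: `ask` stands in for whatever AI tool you use, `on_track` stands in for your own judgment about whether an answer fits the goal, and `fake_model` is a stub invented for the demo. None of these names comes from a real library.

```python
from typing import Callable


def chunk_build_redirect(goal: str, chunks: list[str],
                         ask: Callable[[str], str],
                         on_track: Callable[[str], bool],
                         max_redirects: int = 2) -> list[str]:
    """Work through chunks in order, feeding each output into the next prompt."""
    outputs: list[str] = []
    for chunk in chunks:  # Chunk: one part at a time
        prior = outputs[-1] if outputs else "(nothing yet)"
        # Build: carry the previous output forward as context
        answer = ask(f"Goal: {goal}\nPrevious output: {prior}\nNow work on: {chunk}")
        redirects = 0
        # Redirect: restate the goal when the answer drifts
        while not on_track(answer) and redirects < max_redirects:
            redirects += 1
            answer = ask(f"That drifted from the goal. Goal: {goal}\nRedo only: {chunk}")
        outputs.append(answer)
    return outputs


def fake_model(prompt: str) -> str:
    """Stub model for the demo: drifts on its first attempt at the budget chunk."""
    if "Now work on: budget" in prompt:
        return "Here is a poem about spring"
    return "plan: " + prompt.splitlines()[-1]


if __name__ == "__main__":
    results = chunk_build_redirect(
        goal="launch plan",
        chunks=["scope", "budget"],
        ask=fake_model,
        on_track=lambda answer: answer.startswith("plan:"),
    )
    for line in results:
        print(line)
```

The `max_redirects` cap reflects the advice that some threads aren’t worth salvaging: after a couple of failed redirects, it is usually better to start fresh than to keep correcting.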

4. Recognize Drift Using Your Management Instincts

You already know how to spot when work is going off-track. You feel it when someone’s contradicting earlier decisions. You notice when things get too abstract and need concrete grounding. You recognize when the thread is lost and you need to reset.

Apply those same instincts to AI conversations.

If the AI starts to:

Contradict earlier guidance → Redirect to original constraints. Remind it what you established at the start.

Go too broad or abstract → Ask for specific, concrete examples. Ground the output in real scenarios.

Lose the thread → Start fresh. Don’t try to salvage a conversation that’s gone completely off the rails. Take the best outputs from the previous thread and begin a new one with clearer framing.

Sound confidently wrong (based on your domain expertise) → Stop immediately. This is where your quality recognition protects you. Verify the claim. Correct the error. Don’t let confident-sounding nonsense pass through just because the AI delivered it with authority.

You’re applying management and quality control skills you already have. The only difference is your collaborator has different failure modes than humans do.

When This Framework Doesn’t Fully Apply

I need to be honest about limitations. This framework is powerful for specific contexts and less useful for others.

Pure technical AI engineering: If you’re building models, fine-tuning systems, or working on AI infrastructure, you need deep technical knowledge beyond these capabilities. This framework is for AI operators, not AI engineers.

Zero domain knowledge territory: If you’re working in a completely unfamiliar domain without expertise to verify outputs, quality recognition is missing. You can still use AI in that situation, but you need to either gain enough knowledge to spot errors, or partner with someone who has domain expertise. Without verification capability, you’re trusting outputs you can’t validate.

Advanced context engineering: Building RAG systems, API integrations, or custom memory architectures requires technical implementation skills. The 75/25 Framework will help you design the approach and understand what you need, but you’ll need engineering support to actually build it.

When you’re avoiding experimentation: This framework helps you recognize readiness, but it doesn’t replace hands-on practice. If you’re waiting for “full understanding” before trying anything, you’ll stay stuck. Recognition plus experimentation is the unlock. Understanding the framework should give you confidence to start, not permission to keep researching.

If you’re already competent and seeking a breakthrough: This framework explains how you got to competence, but it won’t carry you through to mastery. You need advanced context engineering, sophisticated workflows, and domain-specific techniques beyond these capabilities. This is foundation-to-intermediate territory, not intermediate-to-advanced. If you’re already getting good results and want to reach the next level, you need different guidance than what this framework provides.

What This Framework IS Good For

Everyday AI collaboration for knowledge work

Getting unstuck when you feel “behind”

Understanding why some people seem naturally good with AI (they have strong foundation capabilities)

Building confidence to experiment from your current capability base

Helping teams recognize existing AI readiness

Diagnosing why you’re fluent in some applications but not others

The framework won’t make you an AI expert overnight. It will help you recognize that you’re already most of the way there.

Your Challenge

Open an AI tool right now.

Don’t learn a single new “AI skill” first. Don’t read another article about prompt engineering. Don’t take a course.

Just treat it like directing work. Set a clear goal. Provide context. Guide the conversation. Redirect when it drifts. Use the exact skills you already have.

You’ll be surprised how much of it just works.

The 75% you need? You’ve been building it your entire career.

The 25% that’s new? You’ll learn it faster by doing than by studying.

Start where you are. Stop waiting to be “ready.”

P.S. — Three Starter Prompts That Lean on Your Existing Strengths

Want to see this framework in action? I’ve created three starter prompt templates matched to different capability profiles. Copy-paste ready — just customize the [bracketed sections] for your situation.

Each includes both a conversational AI version (for ChatGPT, Claude, Gemini) and a research version (for Perplexity) so you can use whichever fits your workflow.

1. The Structured Thinker’s Prompt

For people who break down complex problems systematically. This prompt leverages your Capability 1 strength.

For ChatGPT/Claude/Gemini:

I need help thinking through a complex problem systematically.

The problem: [Describe the challenge or question you're working on]

What I know so far:

- [Key fact or constraint 1]

- [Key fact or constraint 2]

- [Key fact or constraint 3]

What I don't know yet:

- [Unknown or uncertainty 1]

- [Unknown or uncertainty 2]

Help me:

1. Break this problem into distinct component parts

2. Identify which parts I should tackle first and why

3. Surface any assumptions I might be making that we should test

4. Suggest a step-by-step approach to work through this methodically

As we work through this, pause after each major step so I can verify we're on the right track before continuing.

For Perplexity:

What are proven frameworks and methodologies for breaking down complex [your domain] problems systematically? Include step-by-step problem-solving approaches from 2022-2024 research.

2. The Clear Communicator’s Prompt

For people who give great direction. This prompt leverages your Capability 2 strength.

For ChatGPT/Claude/Gemini:

I need help structuring and clarifying communication for [specific audience or purpose].

What I'm trying to communicate: [Your core message or goal]

My audience: [Who they are, their level of familiarity with the topic, what matters to them]

The challenge: [What makes this communication difficult — complexity, sensitivity, confusion, competing priorities, etc.]

What I've drafted so far (if anything): [Paste your rough draft, bullet points, or notes]

Help me:

1. Identify the single most important point I need to get across

2. Structure this message so it's clear and easy to follow

3. Spot anywhere I'm being vague, using jargon, or assuming knowledge they might not have

4. Suggest where I should add context or examples to make this concrete

Give me a revised version, then explain what you changed and why.

For Perplexity:

What are evidence-based best practices for clear business communication to [your audience type]? Include frameworks for structuring complex messages and reducing ambiguity from recent communication research.

3. The Domain Expert’s Prompt

For people with deep subject knowledge. This prompt leverages your Capability 3 strength.

For ChatGPT/Claude/Gemini:

I'm a [your role/domain expertise] working on [specific task or decision].

My domain knowledge includes:

- [Area of expertise 1]

- [Area of expertise 2]

- [Relevant experience or context]

The task: [What you're trying to accomplish]

What I need from you:

1. Help me apply best practices from [your domain] to this specific situation

2. Ask me clarifying questions about [domain-specific considerations] before suggesting an approach

3. Flag anywhere your suggestions might conflict with [domain standards, regulations, or constraints]

4. When you make recommendations, explain the reasoning so I can verify it against my domain knowledge

I'll correct you if something doesn't align with [industry standards / technical requirements / domain realities]. I need you to adapt based on that feedback rather than defending your original suggestion.

Let's start by you asking me 2-3 questions to understand the specific context before proposing any solutions.

For Perplexity:

What are current best practices and emerging standards in [your domain/industry] for [your specific task]? Include peer-reviewed sources and industry publications from the past 2 years.

And if you try the framework and discover something surprising about which capabilities came naturally versus which needed work, I'd genuinely love to hear about it. The pattern keeps revealing new nuances.

Good Luck - Dan