Budget for AI Like R&D, Not Software Licenses

Why "Which Tool Should We Standardize On?" Is the Wrong Question (And What to Ask Instead)

“Which AI tool should we standardize on?”

I’ve heard this question from three clients this month alone. One built a 47-page RFP. Another has a committee that’s been evaluating options for 8 months. A third is waiting for “the dust to settle” before committing.

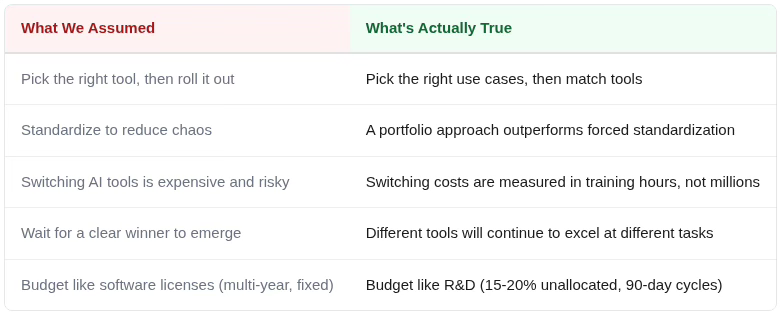

They’re all making the same mistake: applying enterprise software logic to a fundamentally different category.

I spent the last few weeks reviewing 45+ sources (including industry reports from Menlo Ventures, McKinsey, Deloitte, and Andreessen Horowitz) to answer a simpler question: what are organizations that are actually succeeding with AI doing differently?

What I found surprised me. They’re not picking the “right tool.” They’re building the right infrastructure for experimentation.

What you’ll walk away with:

Why the enterprise software playbook fails for AI, and the data that proves it

The R&D budgeting mindset, including the specific percentage to reserve for experimentation

The 4-Pillar Enablement Framework (policy, data classification, training, guardrails) that enables speed

The Traffic Light System for removing bottlenecks without removing guardrails

The 30-Day Quick-Start Checklist, so you know what to do this month

The honest caveats, because this approach does have limits

The single sentence version:

Your CRM required a 3-year roadmap. Your AI portfolio requires a 90-day pilot cycle.

That shift changes how you approach every decision that follows.

Why Enterprise Software Logic Fails Here

When you bought your CRM or ERP system, you were making a 3-5 year commitment. Migration costs were real (often millions). Data lock-in was significant. The right play was: evaluate thoroughly, negotiate hard, implement once.

AI tools don’t work that way.

Most AI tools operate on monthly subscriptions ($20-30 per user per month for consumer tiers, usage-based pricing for API access). Model capabilities change quarterly, not annually: Claude, Gemini, and ChatGPT all shipped major capability jumps in 2025 alone.

Andreessen Horowitz found that enterprises in 2024 “were designing their applications to minimize switching costs and make models as interchangeable as possible.” Switching costs are no longer a fixed price of adoption; teams are deliberately engineering them down.

The data backs this up:

76% of AI use cases are now purchased rather than built internally (Menlo Ventures, 2025)

31% of AI use cases reached full production in 2025, double the 2024 figure (ISG)

Many professionals are running multi-model portfolios: Claude for coding, Gemini for research, ChatGPT for general tasks, Perplexity for citation-backed research

The winners aren’t picking winners. They’re building portfolios matched to use cases.

The 4 Pillars of AI Enablement Infrastructure

Before “budget like R&D” makes sense, you need the right infrastructure in place. Without it, experimentation turns into chaos. With it, experimentation becomes learning.

Pillar 1: Policy (The 2-Page Version)

You don’t need a 100-page manual. Honestly, nobody will read it anyway.

You need clarity on four things:

Where AI can assist vs. where it must be avoided

Where human judgment must lead

What data can and cannot be input

The requirement that AI output be reviewed before use

Sample language: “Employees may use approved AI tools for internal content creation. AI tools may NOT be used with client confidential information or production systems without IT approval. All AI-generated code requires peer review.”

That’s it. A 2-page AI policy beats a 100-page manual because people will actually read it. Clarity enables speed.

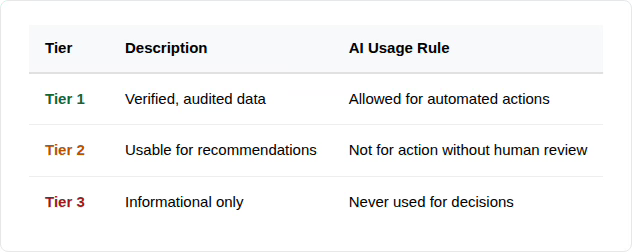

Pillar 2: Data Classification (Trust Tiers)

Not all data is equal. A simple three-tier system prevents confidently wrong automation.

Most organizations have never classified their data this way. Start simple: customer PII is Tier 3. Internal operational data might be Tier 2. Public marketing content is Tier 1.

You can get more sophisticated later. Right now, you need the categories to exist.
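To make "the categories exist" concrete, here is a minimal sketch of a tier lookup, assuming you record data types as simple labels. The category names (`customer_pii` and so on), the `classify` helper, and the rule that anything unclassified defaults to Tier 3 are all hypothetical choices for illustration, not a standard.

```python
"""Hypothetical sketch of a three-tier data classification lookup."""
from enum import IntEnum


class DataTier(IntEnum):
    # Higher number = more sensitive; mirrors the Tier 1/2/3 scheme above.
    TIER_1 = 1  # e.g. public marketing content
    TIER_2 = 2  # e.g. internal operational data
    TIER_3 = 3  # e.g. customer PII


# Illustrative starting map; the labels are placeholders for your own data types.
CLASSIFICATION: dict[str, DataTier] = {
    "public_marketing_content": DataTier.TIER_1,
    "internal_operational_data": DataTier.TIER_2,
    "customer_pii": DataTier.TIER_3,
}


def classify(data_types: list[str]) -> DataTier:
    """Return the tier that governs an input containing all of data_types.

    The most sensitive type wins. Anything not yet classified is treated as
    Tier 3, an assumed fail-safe default. An empty input carries no
    classified data, so it falls back to Tier 1.
    """
    tiers = [CLASSIFICATION.get(t, DataTier.TIER_3) for t in data_types]
    return max(tiers, default=DataTier.TIER_1)
```

For example, `classify(["public_marketing_content", "customer_pii"]).name` is `"TIER_3"`: one piece of customer PII makes the whole input Tier 3. Defaulting unknown types to the strictest tier keeps the map small at the start without letting gaps become leaks.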

Pillar 3: Training (30-45 Minutes)

The training gap is massive. According to industry surveys, 44% of companies don’t use proven learning methods for AI and have no plans to start.

But you don’t need a semester. A 30-45 minute module covering these four areas (with an annual refresh) is the minimum viable investment:

What AI does and doesn’t do

How to interpret outputs

When to override

How to report issues

The point is baseline competence, not expertise. You’re not training data scientists. You’re making sure people know the guardrails exist.

Pillar 4: Technical Guardrails (Enable, Don’t Block)

Here’s the thing about banning AI tools: your employees will use them anyway.

43% of employees already share sensitive work data with AI tools without employer permission (Cybsafe/National Cybersecurity Alliance, 2025). Pretending otherwise just means you don’t know what’s happening.

The smarter play is governed alternatives:

Pre-approved tool catalog (start with 2-3)

“Nudge” system that guides rather than blocks

Sandbox environments for experimentation

DLP rules tailored for AI platforms

Governance doesn’t slow experimentation. It removes the uncertainty that’s actually slowing you down. When people know what’s allowed, they move faster.
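The difference between a nudge and a block is easiest to see in code. Below is a minimal sketch, assuming a hypothetical pre-submission check that scans a prompt for a couple of obviously sensitive patterns and returns warnings for the user to act on, rather than rejecting the request. The two regexes and the `nudge` function are illustrative only; real DLP tooling is far broader and should come from your security stack, not a snippet like this.

```python
"""Hypothetical sketch of a 'nudge' check: warn, never block."""
import re

# Illustrative patterns only; production DLP rules cover far more data types.
PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "US Social Security number": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def nudge(prompt: str) -> list[str]:
    """Return one warning per sensitive pattern found in the prompt.

    An empty list means nothing was flagged. The caller shows the warnings
    and lets the user decide; this function never rejects the request.
    """
    warnings = []
    for label, pattern in PATTERNS.items():
        if pattern.search(prompt):
            warnings.append(
                f"This looks like it contains a {label}. "
                "Is this tool approved for that data?"
            )
    return warnings
```

Returning warnings instead of raising an error is the design choice that matters here: the person stays in control, and the reminder arrives at the exact moment the guardrail is relevant.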

The Data Behind This

The Shadow AI Reality

80%+ of employees use AI tools for work; fewer than 30% of organizations have formal policies (Ocean Solutions, 2025)

43% of employees share sensitive work information with AI tools without employer permission (Cybsafe/National Cybersecurity Alliance, 2025)

$670,000 in higher average breach costs for organizations with high levels of unsanctioned (shadow) AI usage (IBM, 2025)

The ROI Measurement Challenge

97% of organizations struggle to demonstrate GenAI business value (KPMG/Informatica)

Only 51% can confidently evaluate AI ROI (CloudZero, 2025)

Yet 74% say their most advanced AI initiatives meet or exceed ROI expectations (Deloitte, 2024)

What’s Actually Working

91% of SMBs using AI report revenue lift (Salesforce, 2024)

Enterprises are explicitly designing applications to “minimize switching costs and make models as interchangeable as possible” (Andreessen Horowitz, 2025)

15-20% of AI budget reserved for experimentation is the recommended allocation (McKinsey/Google, 2025)

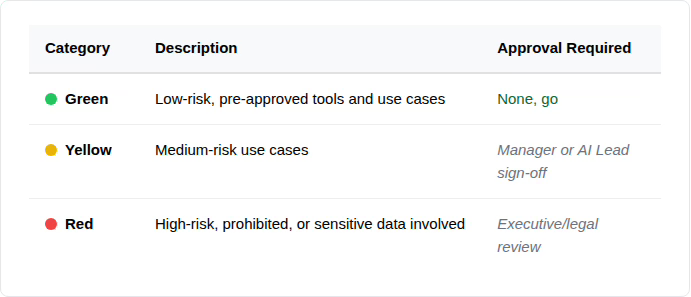

The Traffic Light Classification System

This is the simplest way to remove bottlenecks while maintaining boundaries.

Most day-to-day AI usage should fall into Green. Yellow covers new tools or unfamiliar use cases. Red is reserved for anything touching customer data, production systems, or regulated information.

The power of this system: it removes the “ask permission for everything” bottleneck while keeping guardrails where they matter. People stop waiting. Work gets done.
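The three rules above are simple enough to write down as a triage function. This is a minimal sketch, assuming each request can be described by a handful of yes/no flags; the `UseRequest` fields, the `APPROVED_TOOLS` placeholder names, and the `triage` function are hypothetical. The ordering is the point: Red conditions are checked first, so a familiar, approved tool still goes Red the moment it touches customer data.

```python
"""Hypothetical sketch of Traffic Light triage for an AI use request."""
from dataclasses import dataclass
from enum import Enum


class Light(Enum):
    GREEN = "green"    # proceed: approved tool, familiar use case
    YELLOW = "yellow"  # check in first: new tool or unfamiliar use case
    RED = "red"        # formal approval: sensitive data or systems


# Placeholder names standing in for your 2-3 pre-approved tools.
APPROVED_TOOLS = {"tool_a", "tool_b"}


@dataclass
class UseRequest:
    tool: str
    familiar_use_case: bool
    touches_customer_data: bool = False
    touches_production: bool = False
    touches_regulated_data: bool = False


def triage(req: UseRequest) -> Light:
    # Red wins over everything: customer data, production systems, regulated info.
    if req.touches_customer_data or req.touches_production or req.touches_regulated_data:
        return Light.RED
    # Yellow: a tool outside the catalog, or a use case nobody has tried yet.
    if req.tool not in APPROVED_TOOLS or not req.familiar_use_case:
        return Light.YELLOW
    # Everything else is day-to-day usage that should not wait on permission.
    return Light.GREEN
```

For instance, `triage(UseRequest("tool_a", familiar_use_case=True))` returns `Light.GREEN`. In a regulated industry, shrinking the green zone means adding conditions to the Red and Yellow checks, not changing the structure.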

The 30-Day Quick-Start Checklist

Weeks 1-2: Foundation

Audit current AI usage (including shadow AI; it’s already happening)

Designate an AI Lead (the owner, an ops manager, or a fractional hire)

Create a 1-2 page acceptable use policy

Define data classification (Tier 1/2/3)

List 2-3 approved tools

Weeks 3-4: Enable

Deploy the 30-45 minute training

Communicate the policy to all employees

Set up a feedback channel for AI questions

Identify 1-2 AI champions per department

Ongoing Rhythm

Quarterly policy review

Monthly AI champion sync

Annual training refresh

The Budget Allocation

Stop thinking in multi-year contracts. Start thinking in 90-day cycles.

Plan for monthly AI subscriptions, not multi-year commitments

Reserve 15-20% of AI budget for experimentation (McKinsey recommendation)

Track AI costs separately from traditional software

Expect usage-based costs to vary; build in contingency

The 15-20% experimentation reserve is critical. As McKinsey put it in their research with Google: “Companies do need some budget that’s just for experimentation. You don’t want too high a bar for just getting your foot in the door.”
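Here is the arithmetic for one 90-day cycle as a minimal sketch. The 15-20% experimentation range comes from the text; the 10% contingency default for usage-based overages is an assumed placeholder, and the `plan_cycle` function is hypothetical.

```python
"""Hypothetical sketch: splitting a 90-day AI budget into core, experiment, and buffer."""


def plan_cycle(
    monthly_budget: float,
    experiment_share: float = 0.15,
    contingency_share: float = 0.10,  # assumed buffer, not a sourced figure
    months: int = 3,
) -> dict[str, float]:
    """Split one pilot cycle's AI budget into three buckets."""
    # Enforce the 15-20% experimentation reserve recommended in the text.
    if not 0.15 <= experiment_share <= 0.20:
        raise ValueError("experiment_share should stay within the 15-20% range")
    if experiment_share + contingency_share >= 1:
        raise ValueError("reserves leave nothing for core subscriptions")
    total = monthly_budget * months
    experimentation = total * experiment_share
    contingency = total * contingency_share
    return {
        "cycle_total": round(total, 2),
        "experimentation": round(experimentation, 2),
        "contingency": round(contingency, 2),
        "core_subscriptions": round(total - experimentation - contingency, 2),
    }
```

With a $10,000 monthly AI budget, `plan_cycle(10_000)` splits a $30,000 quarter into $4,500 for experiments, $3,000 of buffer for usage spikes, and $22,500 for core subscriptions. Re-running it each cycle, rather than locking numbers in for a year, is the R&D habit in miniature.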

The Honest Caveats

I’d be doing you a disservice if I didn’t mention where this approach has limits.

Agentic AI is creating real lock-in.

From Andreessen Horowitz (2025): “As companies invest the time and resources into building guardrails and prompting for agentic workflows, they’re more hesitant to switch to other models... all the prompts have been tuned for OpenAI.”

If you’re building complex autonomous workflows, switching costs rise. The R&D approach works best for tool-level experimentation, not deeply integrated agentic systems.

Regulated industries need more structure.

Healthcare, finance, and government may require the “controlled autonomy” approach rather than “guarded freedom.” The traffic light system still works, but the green zone shrinks.

This doesn’t solve the ROI measurement problem.

Budget flexibility helps, but you still need to define success metrics before deployment. The 97% who struggle to demonstrate value aren’t falling short because of tool selection. They’re falling short on measurement.

Shadow AI is a security risk, not just a governance inconvenience.

The $670K breach cost premium is real. This framework helps, but it’s not a substitute for proper security controls.

So What Now?

Stop asking “which AI tool should we use?”

Start asking:

Which use cases would benefit most from AI?

What infrastructure makes experimentation safe?

How do we measure success in 90 days, not 3 years?

The organizations winning with AI aren’t the ones who picked the “right” tool. They’re the ones who built the right conditions for learning.

Your CRM required a 3-year roadmap. Your AI portfolio requires a 90-day pilot cycle.

That’s not a limitation. That’s your advantage.

P.S. If you’re already dealing with shadow AI (and statistically, you probably are: 43% of employees in one 2025 survey admitted sharing sensitive data with AI tools without approval), start with the audit. You can’t govern what you can’t see.

And if you want to share this with your leadership team, here’s the executive summary: Budget like R&D, not software licenses. Reserve 15-20% for experimentation. Measure in 90-day cycles. Put four things in place: a 2-page policy, data classification, 30-45 minute training, and technical guardrails that enable rather than block.

That’s it. That’s the strategy.

Try This Prompt

Create an AI Acceptable Use Policy

For ChatGPT/Claude:

You are tasked with generating an AI Acceptable Use Policy document for an organization. The target audience is all employees, so the tone should be clear, concise, and easily understandable. The document should be formatted for readability, using a heading structure to delineate key sections. The final document should be approximately 1-2 pages in length. Use Markdown formatting.

Begin with a level 1 heading (H1) for the policy title: "AI Acceptable Use Policy." Follow this with a brief introductory paragraph explaining the purpose of the policy: to ensure responsible and ethical use of AI tools within the organization, to protect sensitive data, and to comply with relevant regulations.

The first major section, under a level 2 heading (H2) titled "Approved AI Tools," should list the AI tools currently approved for use by employees. [Illustrative Example: Approved tools include Grammarly for grammar checking, Otter.ai for transcription, and internal AI-powered data analysis dashboards]. For each approved tool, include a brief description of its intended use. State clearly that only approved tools are permitted for company-related tasks unless explicit approval is granted.

The second section, under a level 2 heading (H2) titled "Data Usage Guidelines," should outline what data can and cannot be used with AI tools. Emphasize the importance of protecting sensitive and confidential information. Provide specific examples of prohibited data types. [Illustrative Example: Do not input Personally Identifiable Information (PII) such as customer names, addresses, Social Security numbers, or financial data into any AI tool unless it is explicitly approved for handling such data and has appropriate security measures in place. Company confidential information, such as trade secrets, financial projections, and unpublished product roadmaps, is also prohibited]. Include a statement that employees are responsible for ensuring that all data used with AI tools complies with the company's data privacy and security policies.

The third section, under a level 2 heading (H2) titled "Human Review Requirements," details the requirements for human oversight of AI-generated outputs. Explain that AI outputs should not be treated as definitive and always require human review for accuracy, completeness, and appropriateness. Specify situations where human review is especially critical. [Illustrative Example: AI-generated content intended for external communication, such as marketing materials or customer support responses, must be reviewed by a human editor before publication. AI-driven analysis used for decision-making, such as sales forecasts or risk assessments, must be validated by a subject matter expert]. State that the level of human review should be commensurate with the risk associated with the AI's output.

The fourth section, under a level 2 heading (H2) titled "Requesting Approval for New Tools and Use Cases," describes the process for employees to request approval for new AI tools or novel applications of existing tools. Provide clear instructions on how to submit a request, what information to include, and who is responsible for reviewing and approving the request. [Illustrative Example: Requests should be submitted to the IT department via email at AI-Requests@example.com and include a description of the tool, its intended use, the data it will access, and the potential risks and benefits. The IT department will review the request in consultation with the Legal and Security teams]. Include an estimated turnaround time for review. [Illustrative Example: The review process typically takes 5-7 business days].

Conclude with a level 2 heading (H2) titled "Policy Violations." This section should clearly state the consequences of violating the AI Acceptable Use Policy, which may include disciplinary action, up to and including termination of employment. Emphasize that all employees are responsible for adhering to this policy and reporting any suspected violations.

For Perplexity:

Research the Full Policy

What are the essential components of an AI acceptable use policy for organizations in 2024-2025? Include examples from companies that have published their policies and any regulatory requirements.

Research: Approved Tools Section

What AI tools are commonly approved for enterprise use in 2024-2025 and what criteria do IT departments use to evaluate and approve AI tools for employee use?

Research: Data Usage Guidelines

What types of data should be prohibited from AI tools in corporate policies? Include examples of data breaches or incidents caused by employees inputting sensitive data into AI systems.

Research: Human Review Requirements

What are best practices for human oversight of AI-generated content in business settings? Include guidance on when human review is legally or ethically required.

Research: Approval Process

How do organizations structure the approval process for new AI tools? Include turnaround times, stakeholders involved, and risk assessment frameworks used by IT and legal teams.

Research: Policy Violations

What are typical consequences for AI policy violations in corporate settings and how do companies handle employee misuse of AI tools?

The Dare

Pick one pillar from the framework. Just one. Before the end of this week, take a single action on it:

Policy: Draft a one-paragraph AI use statement

Data Classification: List your five most sensitive data types

Training: Schedule 30 minutes to watch one AI capabilities video with your team

Guardrails: List what AI tools employees are already using

One pillar. One action. This week.

Good Luck - Dan